Lesson #84 - Personal Language Model

Kris Durski

We are so grateful for your loyalty and support. You are the reason we do what we do. Thank you for being part of our amazing community. You rock! 👏🤟

Let’s put everything together to see how the PLM can help users navigate today’s digital jungle of resources. Internet search engines, especially AI-powered ones, are very convenient services for finding information quickly and reliably. However, it may still take several iterations to formulate good queries that lead to the desired sources of information. A PDS (Personal Digital Secretary) that implements a PLM (Personal Language Model) can perform those searches autonomously and involve the user only at the final stage, when those resources have been located. It may even aggregate the results for more convenience. Besides search engines, the user may be looking for specialized services such as those related to health, food, households, cars, travel, and many others, and the PDS could locate or even request them on the user’s behalf. If it sounds good, keep reading.

We are all familiar with PDAs (Personal Digital Assistants), which keep access to digital resources at our fingertips but also keep our minds focused on them. We can schedule when we should access them, and PDAs will meticulously keep reminding us about them based on that schedule. Have you had that annoying moment when your PDA reminded you of something at the least desired time? Or did you get so many reminders at once that you couldn’t possibly handle them all? I guess it did happen, and not just once, right? It’s just life: we keep responding to events and often don’t realize that we have agreed to overlapping tasks despite this fancy scheduling tool, because tasks usually take longer than planned. Sound familiar?

So what do you do in those cases? I get it, you don’t have to explain. But what can be done to avoid those difficult and sometimes even embarrassing moments? Simply put, we need help, but not the kind that comes from putting somebody on hold or asking for more time, which is what we end up doing when we have to be personally involved. Many of those tasks, however, can be performed by somebody else or, more importantly, by something else that is intelligent enough to act on our behalf, and that’s where the PDS comes to mind. You can tell your PDS not to disturb you now, and it will sift through the upcoming events and only remind you if you must be involved and the event cannot wait. The others will either wait or be handled by the PDS.
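The do-not-disturb behavior described above can be sketched as a small triage rule. This is only an illustration, not the actual PDS logic: the `Event` fields `needs_user` and `can_wait`, the `Action` names, and the `triage` function are all hypothetical names introduced for this example.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    REMIND_NOW = "remind_now"  # interrupt the user
    DEFER = "defer"            # hold the event until do-not-disturb ends
    DELEGATE = "delegate"      # the PDS handles it on the user's behalf


@dataclass
class Event:
    title: str
    needs_user: bool  # assumption: the user must be personally involved
    can_wait: bool    # assumption: the event can be postponed


def triage(event: Event, do_not_disturb: bool) -> Action:
    """Decide what to do with an upcoming event."""
    if not event.needs_user:
        # Nothing here requires the user, so the PDS can take care of it.
        return Action.DELEGATE
    if do_not_disturb and event.can_wait:
        # The user is needed, but not right now.
        return Action.DEFER
    # The user must be involved and the event cannot wait
    # (or the user did not ask to be left alone).
    return Action.REMIND_NOW


upcoming = [
    Event("Renew parking permit", needs_user=False, can_wait=True),
    Event("Weekly status call", needs_user=True, can_wait=True),
    Event("Boarding closes in 20 minutes", needs_user=True, can_wait=False),
]
decisions = {e.title: triage(e, do_not_disturb=True) for e in upcoming}
```

With do-not-disturb switched on, only the third event interrupts the user; the first is delegated and the second is deferred.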

How is it possible that a machine can be so entangled in our lives? To answer this question we should revisit the progress that has been made in neural computing. It all started when Warren McCulloch and Walter Pitts introduced the Threshold Logic Unit (TLU) in 1943. Frank Rosenblatt then turned it into a hardware implementation in 1957 under the name Perceptron. This was the beginning of artificial neural networks. Initial progress was relatively slow because neural networks were difficult to train, until David E. Rumelhart et al. published a practical approach to backpropagation in 1986. The next big step was the introduction of the CNN (Convolutional Neural Network), also in the 1980s. CNNs did not pick up on a large scale until the 2000s, after K. S. Oh and K. Jung showed in 2004 that neural networks could be greatly accelerated using GPUs (Graphics Processing Units). Then, in 2017, the first transformer was published, although it capitalized on several ideas from previous years. This summarizes a long history of about 80 years of evolution in neural computing. During that period not only did algorithms change dramatically, but computing hardware also made significant progress, which made it possible to create practical applications of artificial neural networks, which are very hungry for computing power.

By now, we already know that transformer neural networks are best suited for processing sequences of concepts that form the vocabulary of a network. However, the input and output vocabularies can represent separate sets of entirely different concepts, from words of natural language to gene sequences to graphic primitives, and many more.

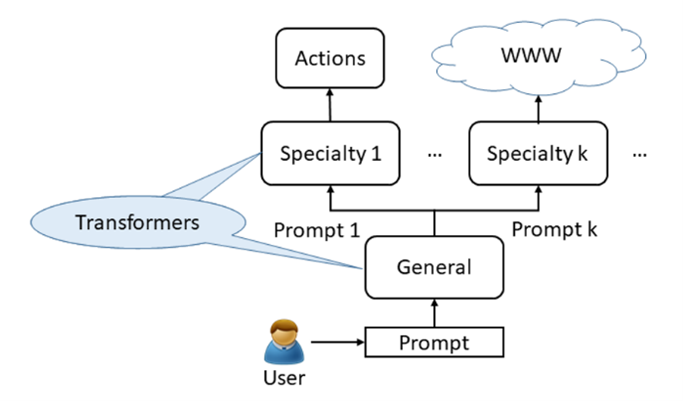

Transformers can translate natural languages, generate biological structures based on genes, create geometrical objects based on captions, perform sequences of actions based on request prompts, and so on. The PDS is intended to translate human requests into sequences of actions or to forward those requests to cloud-based services and AI engines, as shown in Figure 1 (Structure of PLM Transformers). The PLM is capable of extending relatively simple natural-language contexts into simple sequences of words that can be mapped to various concepts, such as trainable sequences of actions or prompts requesting services.

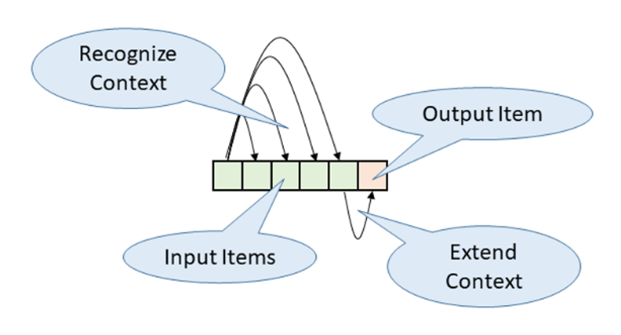

Why is there so much noise about transformers? What’s the big deal? Transformers are trained to predict words/concepts based on the words/concepts encountered so far, that is, to recognize the context of those words/concepts and to continue it with the words/concepts that should follow but have not yet been spoken or presented. In other words, someone starts a sentence or paragraph and the transformer is expected to finish it without changing the meaning, as shown in Figure 2 (Context recognition and extending).

That’s pretty much how people approach it too, though many of them continue within their own chosen context, which is the opposite of active listening and leads to misunderstanding, confusion, and often missed opportunities. When training transformers we do our best to avoid that kind of behavior.

In summary, the PLM learns the context and the rules for producing a sequence of vocabulary items within that context, not the concepts/words themselves. The same is true of people: the best way to learn a language is to learn the use of words within a context, not just the words, which can evaporate from memory very quickly. This applies not just to languages but to any activity where it is important to see, hear, or feel the context of actions/events, and then practice makes perfect. The PLM is no exception, so it needs additional training and tuning when it has to apply its knowledge to other areas of activity.
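To make the "someone starts the sentence and the model finishes it" idea concrete, here is a deliberately tiny sketch. It is not a transformer and not the PLM: it is a count-based stand-in that looks only one word back, whereas a transformer attends to the whole context. The function names and the three training sentences are made up for this illustration.

```python
from collections import Counter, defaultdict


def train_bigrams(corpus):
    """Count, for every word, which words follow it in the training sentences."""
    follows = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows


def continue_sequence(follows, prompt, max_new_words=5):
    """Extend the prompt by repeatedly appending the most frequent next word."""
    words = prompt.lower().split()
    for _ in range(max_new_words):
        candidates = follows.get(words[-1])
        if not candidates:
            break  # the last word was never followed by anything in training
        words.append(candidates.most_common(1)[0][0])
    return " ".join(words)


# Made-up training sentences, standing in for user requests.
corpus = [
    "book a table for two",
    "book a table for dinner",
    "book a flight for two",
]
model = train_bigrams(corpus)
completed = continue_sequence(model, "book a")  # "book a table for two"
```

Even this toy version learns how words are used in sequence rather than the words in isolation, which is the point made above. What it lacks is the long-range context that a transformer provides.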

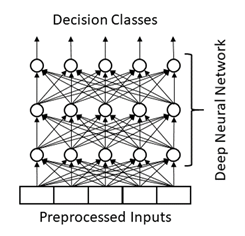

Classification neural networks, which include CNNs, learn from input data/measurements which classes those inputs belong to. In other words, they learn how to assign static objects/entities to their classes, as shown in Figure 3 (Classification neural network). Transformers, on the other hand, learn dynamic relationships between concepts within a given sequence, so they can predict the continuation of a sequence of concepts from a provided starting sample of that sequence. This makes them particularly useful for predicting a sequence of events based on observation of events that have already happened, e.g. predicting the reactions of public investors on a stock market or the trajectory of an airplane given the settings of its controls and the observed flight path. These examples should already tell you that transformers can make predictions similar to those people make, but a sufficiently large transformer network can take more factors into account than a person can and thus may be more precise in those anticipations. Because they can predict and decide what should be done next, combinations of CNNs and transformers can create powerful alternatives to human operators. They can also be fast partners in knowledge retrieval based on provided observations: unlike databases, where the right keywords must be known upfront, transformers can guess the context to get the right content.

The PDS is certainly less powerful than cloud-based AI services due to the limited power of edge devices, but its role is to navigate between those services to get the proper help from them at the right time. As a personal digital secretary, it has to have access to more personal data, which should never be passed to cloud-based services, so the PDS is a buffer between local handling and the cloud (see Figure 4, Requests to PDS). The main goal of the PDS is to protect user data and help handle the Vault Security platform, which includes but is not limited to authentication and authorization services.
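The buffer role can be pictured with a short sketch: handle a request on the device when a local skill matches, and otherwise forward it to the cloud only after personal details have been stripped out. Everything here is an assumption made for illustration, including the `route` and `redact` functions, the keyword-based skill lookup, and the two regular expressions. A real PDS would need a far more careful notion of what counts as personal data.

```python
import re

# Hypothetical patterns for data that must stay on the device.
PERSONAL_PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "phone": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def redact(text):
    """Replace anything matching a personal-data pattern with a placeholder."""
    for label, pattern in PERSONAL_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


def route(request, local_skills):
    """Return ("local", result) if a local skill matches the request,
    otherwise ("cloud", redacted_request) ready to be forwarded."""
    lowered = request.lower()
    for keyword, handler in local_skills.items():
        if keyword in lowered:
            return ("local", handler(request))
    return ("cloud", redact(request))


# Hypothetical local skill: reminders never leave the device.
skills = {"remind": lambda req: "reminder set"}

local_result = route("Remind me to call the dentist at 5", skills)
cloud_result = route("Find a dentist and send the list to ann@example.com", skills)
```

In this sketch the reminder is handled locally, while the search request would go to the cloud with the address replaced by `[email]`.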

Thank you for reading our newsletter and stay tuned for more updates from Vault Security!

It’s been a pleasure to share this article with you today. I hope you found it informative and interesting. I’ll be back next Sunday with more insights and stories. Until then, I wish you a great day and all the best. 👋